First Forays into Rev City and Automatic Transcription

The Revolutionary City seeks to digitize all manuscript material to, from, or about Philadelphia during the American Revolutionary War (1774-1783). As a public-facing portal, the project hopes to reach a broad audience of students, educators, and interested members of the public who want to learn more about the founding of the United States.

From the beginning, transcription has been a big stumbling block for the project. As we started reaching out to educators, they consistently told us we would need to provide all documents in a transcribed form in order to reach our target audiences. Reading handwritten historical documents is hard! However, as the portal has ballooned to over 46,000 manuscript pages—and counting!—, we knew that transcribing these documents by hand would never be possible. It would simply take too long.

Instead, we asked whether a computer could transcribe them, or at least speed things up.

The process of automatically decoding images of written language using a computer is known as optical character recognition (OCR). For computer scientists, OCR is largely considered a “solved” problem for printed text in Western European languages, and countless technologies in our everyday lives depend on it. If you’ve ever copied and pasted text from a photo in your phone, scanned your passport at an airport, or searched a PDF, you’ve used a form of OCR. Despite these successes, OCR remains challenging for non-Western printed scripts and for all forms of handwriting. In particular, connected scripts such as Arabic, scripts used in South Asia, and cursive scripts from European languages are hard to transcribe using OCR.

Handwritten text is such a challenge for OCR that computer scientists have given it its own name: Handwritten text recognition (HTR).

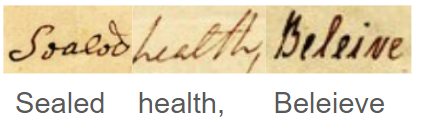

Fortunately, massive steps forward have been made in recent years to crack the code for HTR. There are now a number of existing softwares that can help transcribe handwritten documents. Most of these models rely on some form of deep learning, a type of machine learning through which computers recognize and reproduce patterns. While these technologies are often grouped under the moniker “artificial intelligence,” all they do is encode patterns as probabilities. The computer cannot “see” or “read” letters in the same way that a human being can, but associates the contours of visual material on the page with the probability of being a certain letter.

To “teach” the computer to recognize handwriting, we need to provide it with high-quality samples of correct output. We call these samples “training data.” The computer will then run an algorithm over the training data and create what is known as a “model.” This model encodes the probabilities the computer uses to decode the symbols on the page. Since the computer can only learn what is in its training data, we need lots of high-quality training data to produce an accurate model. Any and every possible variation of a letter must be accounted for in the training set.

To help us create these models, we are using two tightly-coupled pieces of software. Both of these programs have been developed by the Scripta project from the Université Paris Science et Lettres. To train models and run HTR processes, we are using kraken. Kraken is a stand-alone HTR program that allows users to train HTR models and perform all steps of the HTR process. Kraken was originally designed to work with Arabic, but it has shown great success in English cursive, as well as a variety of other scripts. To generate training data, we are using eScriptorium. eScriptorium not only provides a wrapper for running kraken, but also has a user interface to manually generate training data and to correct computationally generated output.

Among the many choices for HTR today, kraken and eScriptorium appealed to us for two reasons. First, these programs are open source, which means that anyone with sufficient knowledge of programming can use them for free and contribute to them. Second, the community of users around these softwares has a strong commitment to an open and transparent practice of model generation. Many users release both their models and the training data used to generate the models. This means that we can verify what is happening when we train these models and retain control over the process. Many AI tools function as a black box and obscure their processes from end users. For this project, we hope to avoid that and engage in an open and transparent process.

However, there are some downsides. Because eScriptorium is an open-source, distributed software, the APS must maintain its own installation on its own servers. Additionally, training models is fairly computationally expensive. Without access to a high-performing graphical processing unit (GPU), training cannot be completed in a reasonable amount of time. This is a challenge for institutions of the APS’s size that want to experiment with machine learning tools. While university libraries and DH centers have access to high-performance computing clusters, independent research libraries make do with comparatively fewer resources. Fortunately, for medium-sized projects, a reasonably powerful gaming computer has a GPU that will do the job just fine. I was able to do initial experiments on my husband’s gaming computer, which when trained overnight only interrupted his gaming for a few hours. Once that proved the viability of the software, the APS invested in a computer with a moderately powerful GPU to begin experimenting with HTR. If these experiments prove successful, we can look into more powerful hardware to handle even larger projects.

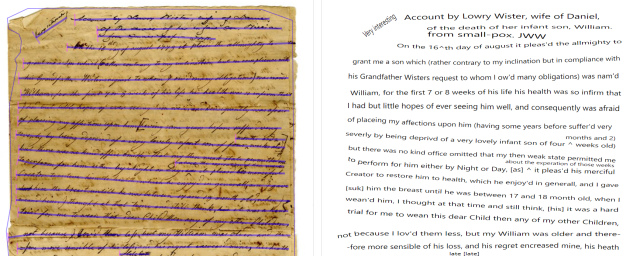

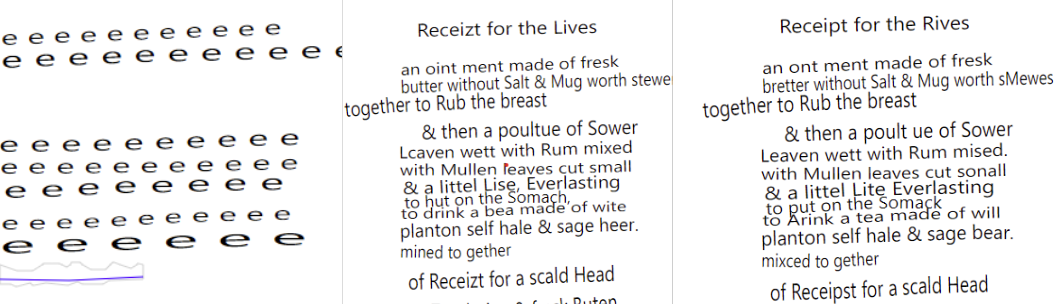

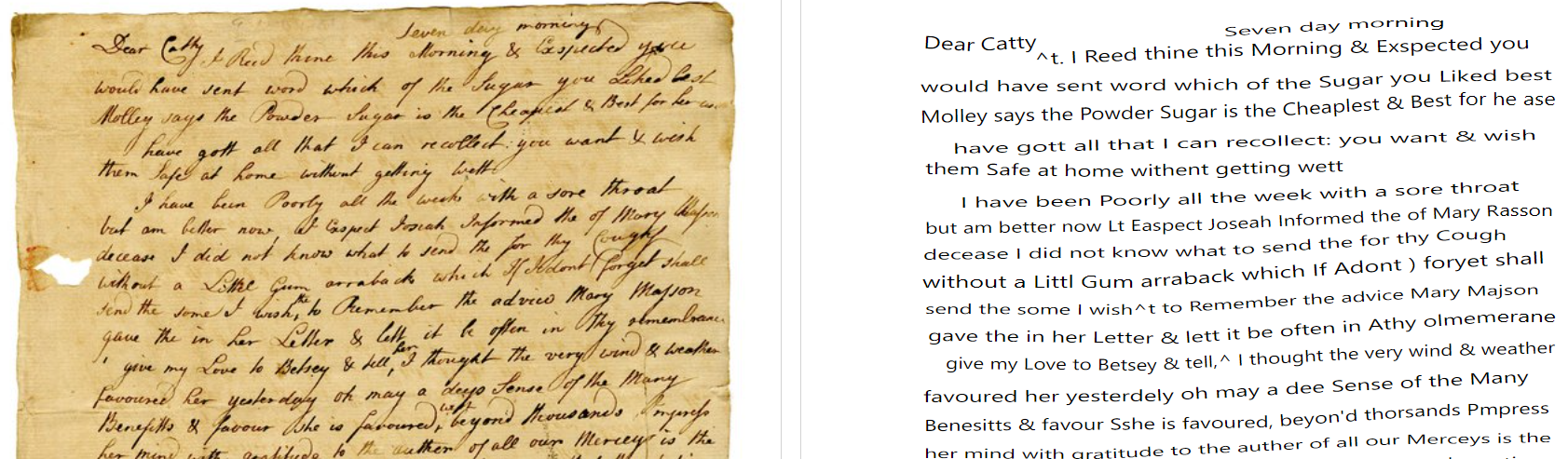

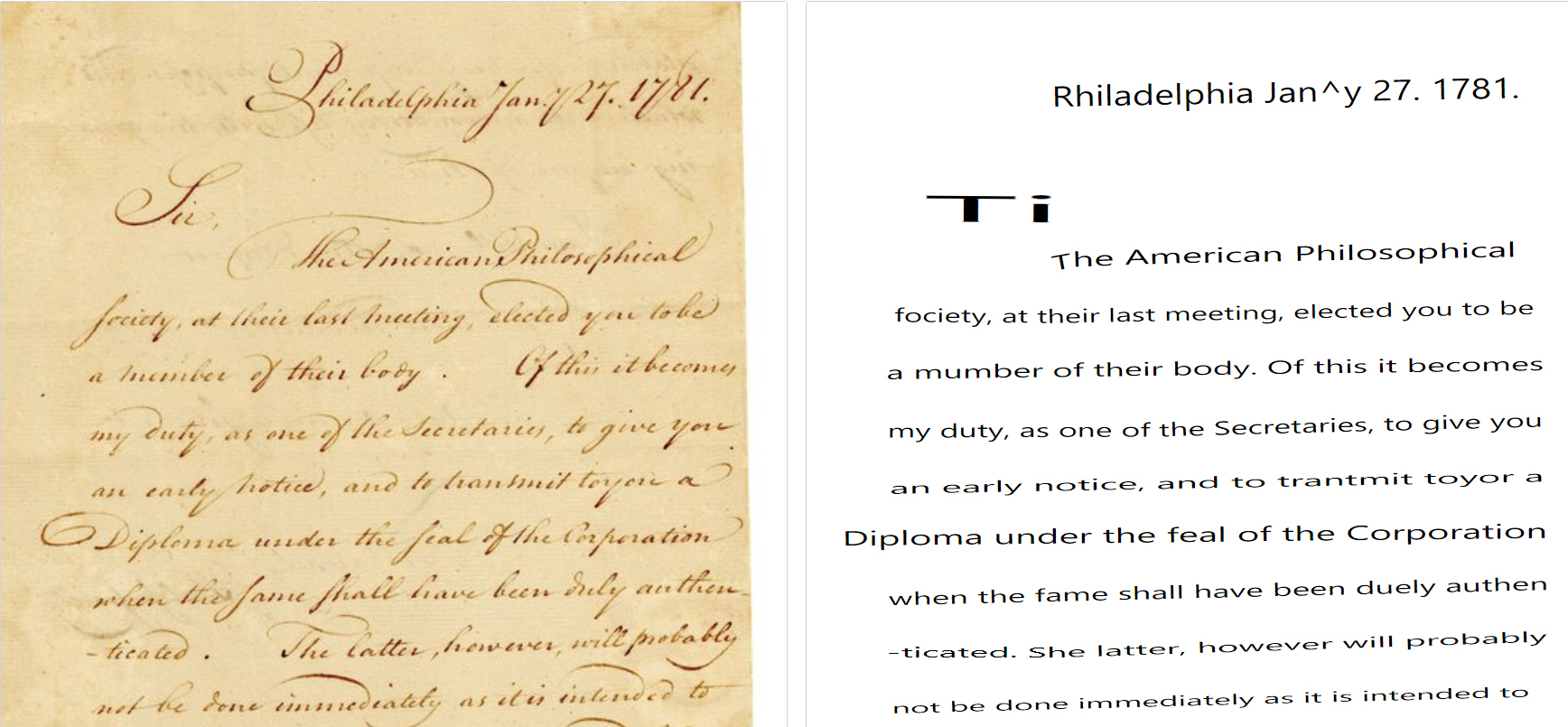

So where are we at? Right now, we have over 80,000 lines of training data. The largest model we have trained incorporates over 45,000 lines of cleaned training data. This model achieves an accuracy of around 94%, though the accuracy drops quite a bit if the material does not resemble something in the training set. As you can see from the examples in this post, the models still require a bit of manual correction; however, use of the HTR software significantly speeds up the transcription process. The machine-generated transcriptions may also serve as an aid to help beginning historians learn to read historical documents.

Once we have completed cleaning all of our training data, we will release the data as well as the model we train from it. This will allow other institutions and historians to use these tools as well.